World's leading AI-Driven Product Discovery Engine

Powered by Fredhopper & XO

Your eCommerce Growth Starts Here!

The search, merchandising, and product recommendations platform of choice, driving online revenue growth for global retailers.

Trusted by top enterprise organizations worldwide, including 25% of the Top 50 EU Retailers!

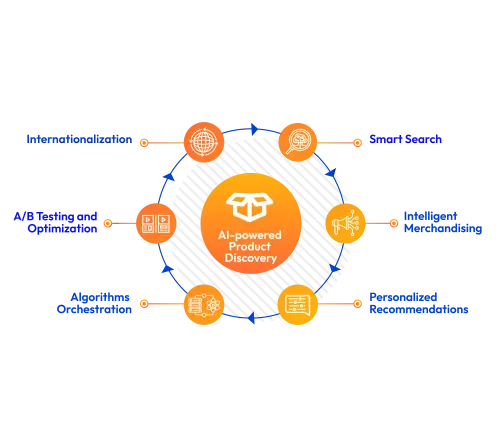

Product Discovery solutions

Powered by Fredhopper!

Capture missed opportunities, put strategy behind your merchandising success, master the complexity of internationalization, and deliver one-to-one (1:1) personalized recommendations that convert.

Say Goodbye to the Complexity of Internationalization

Manage multiple languages, regions, and brands from a single instance. Think global, act local. Optimize your search, merchandising, and recommendations for local preferences and campaigns, with automation that removes the burden and complexity of internationalization. Get back to simple!

AI-powered eCommerce Search

20% of all ecommerce search queries deliver zero or irrelevant results. Capture every purchase by getting your customer to the right product, every time.

Now supporting conversational search, this AI-powered solution delivers a sophisticated shopping experience and increases your average order value (AOV) without taxing your merchandising teams.

Advanced Visual & Product Merchandising

Automate 60% of routine merchandising tasks while improving click-through and conversion. Our patented ranking cocktails let you mix, match, and blend multiple data points to drive better merchandising strategies and optimize your product list pages to align with your business objectives and targets.

“There is no doubt Crownpeak has helped Harvey Nichols to reach many of its digital goals. Product discovery and functionality are essential to the experience on our website. Crownpeak’s search and merchandising capabilities have also consistently helped us to deliver a strategy that supports our luxury curation.”

AI-Enabled 1:1 Personalized Recommendations That Convert

Powered by XO

Leverage AI to keep up with the demand for one-to-one (1:1) recommendations that are relevant and proven to convert into double-digit online revenue growth. Our unique platform combines AI automation with human control, giving you the flexibility to blend algorithms with business controls in complete transparency: no ‘black box’.

Product Discovery Engine Buyer's Guide

Product discovery can result in significant commercial benefits including better conversion rates, higher average order values and enhanced customer loyalty.

How Harvey Nichols optimized on-site search and increased annual search revenue

Harvey Nichols transformed their search capabilities with Crownpeak to completely elevate the shopping experience and reduce time-intensive manual work.

The State of Product Discovery in Digital Commerce 2023

The State of Product Discovery in Digital Commerce 2023, produced by London Research in partnership with Crownpeak, highlights what ecommerce leaders are doing to optimise performance.